Why everyone’s talking about AI agents

A look under the hood of AI agents: what they are, what they do, and why they’re changing the world of AI.

Published 2025-04-11

They say 2025 is the year of AI agents. If you've been anywhere online recently (let alone X), you've probably bumped into the term. Maybe you saw OpenAI's CPO Kevin Weil pegging 2025 as the "year of the agent" at Davos, or Zuck talking about AI engineers.

It feels like the next big thing – the successor to the chatbots we’ve gotten used to.

But what's the actual difference, and why should you care? Let's cut through the noise and figure out what these agents are, what they really do, and how they might change the way we get stuff done.

What are AI agents? (Doers, not just talkers)

In simple terms, AI agents are AI systems that are designed to take actions and complete tasks autonomously to achieve a goal you set.

A typical chatbot – ChatGPT, Claude, Gemini – is primarily a responder. You ask it something, it processes, and it gives you back text, code, or an image. It's an incredible tool for generating content and answering questions, but it mostly waits for your next command.

An AI agent aims to be a doer. Instead of just telling you how to do something, it tries to do it for you.

This means making a plan, using tools, and interacting with the digital world much like a human would.

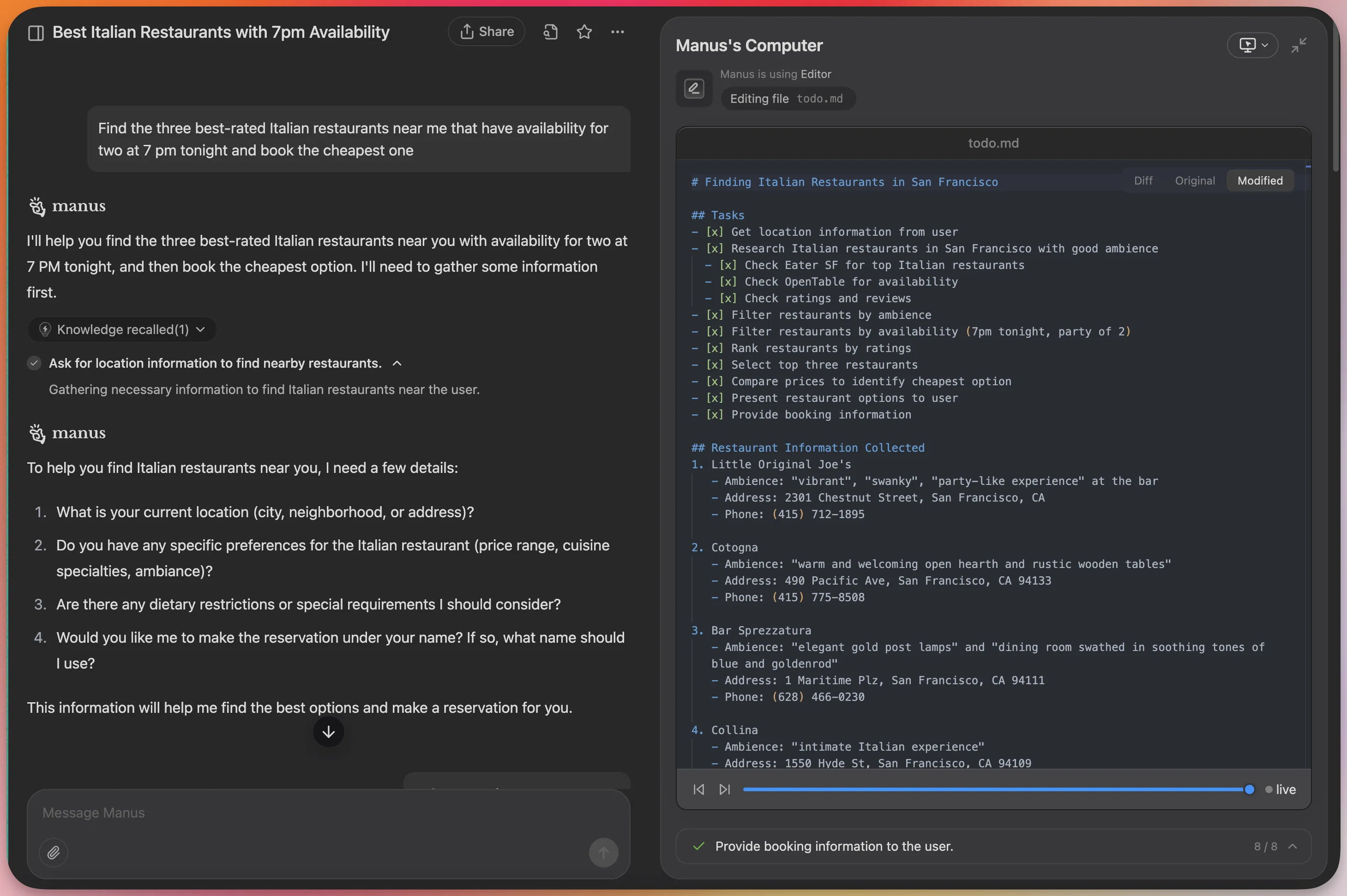

If you ask it: "Find the three best-rated Italian restaurants near me that have availability for two at 7 pm tonight and book the cheapest one"…

…The AI agent would typically work in a loop:

- Think/Plan: Figure out the steps needed to reach the goal, e.g. "First, find nearby Italian spots"

- Act: Use a tool or take an action (like running a web search)

- Observe: See what happened – "Got a list, need to check ratings/availability"

- Think Again: Based on the observation, decide the next step – "Filter list, check booking sites for 7 pm"

This loop continues until the agent achieves the goal. We're already seeing early versions: OpenAI is beta-testing Operator, which can navigate websites to make reservations or purchases. Anthropic's Claude has a 'Computer Use' mode that lets it interact with a virtual computer screen.

It's not perfect – far from it – but the core idea is shifting AI from passively responding to actively executing.
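To make the loop concrete, here is a minimal Python sketch of the restaurant example. Everything in it is an assumption for illustration: the restaurant data is made up, the four "tools" are plain functions, and `plan` is a hard-coded stand-in for the reasoning a real agent would delegate to an LLM. It is not how Operator or Computer Use work internally.

```python
# Toy data standing in for live search results and booking sites (made up).
RESTAURANTS = [
    {"name": "Trattoria Uno", "rating": 4.8, "price": 45, "free_at_7pm": True},
    {"name": "Casa Due", "rating": 4.7, "price": 30, "free_at_7pm": True},
    {"name": "Osteria Tre", "rating": 4.6, "price": 25, "free_at_7pm": False},
    {"name": "Pasta Quattro", "rating": 4.5, "price": 20, "free_at_7pm": True},
    {"name": "Cinque", "rating": 3.9, "price": 15, "free_at_7pm": True},
]


# --- Act: each tool changes the state and returns an observation string ---
def search(state):
    state["candidates"] = list(RESTAURANTS)
    return f"found {len(state['candidates'])} Italian restaurants"


def check_availability(state):
    state["available"] = [r for r in state["candidates"] if r["free_at_7pm"]]
    return f"{len(state['available'])} have a table at 7 pm"


def shortlist(state):
    ranked = sorted(state["available"], key=lambda r: r["rating"], reverse=True)
    state["shortlist"] = ranked[:3]
    return "kept the three best-rated: " + ", ".join(r["name"] for r in ranked[:3])


def book_cheapest(state):
    choice = min(state["shortlist"], key=lambda r: r["price"])
    state["booked"] = choice["name"]
    return f"booked {choice['name']}"


TOOLS = {
    "search": search,
    "check_availability": check_availability,
    "shortlist": shortlist,
    "book_cheapest": book_cheapest,
}


def plan(state):
    """Think: choose the next tool from what has been observed so far.

    A real agent would ask an LLM here; these fixed rules are a stand-in.
    """
    for key, tool in [("candidates", "search"),
                      ("available", "check_availability"),
                      ("shortlist", "shortlist"),
                      ("booked", "book_cheapest")]:
        if key not in state:
            return tool
    return None  # goal reached


def run_agent(max_steps=10):
    state, log = {}, []
    for _ in range(max_steps):           # cap the loop so it cannot run forever
        tool = plan(state)               # Think / Think again
        if tool is None:
            break
        observation = TOOLS[tool](state)  # Act
        log.append((tool, observation))   # Observe
    return state, log


state, log = run_agent()
for tool, observation in log:
    print(f"{tool}: {observation}")
```

The step cap matters in practice: an agent that never reaches its goal should stop and report back rather than loop indefinitely.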

AI agents vs AI workflows

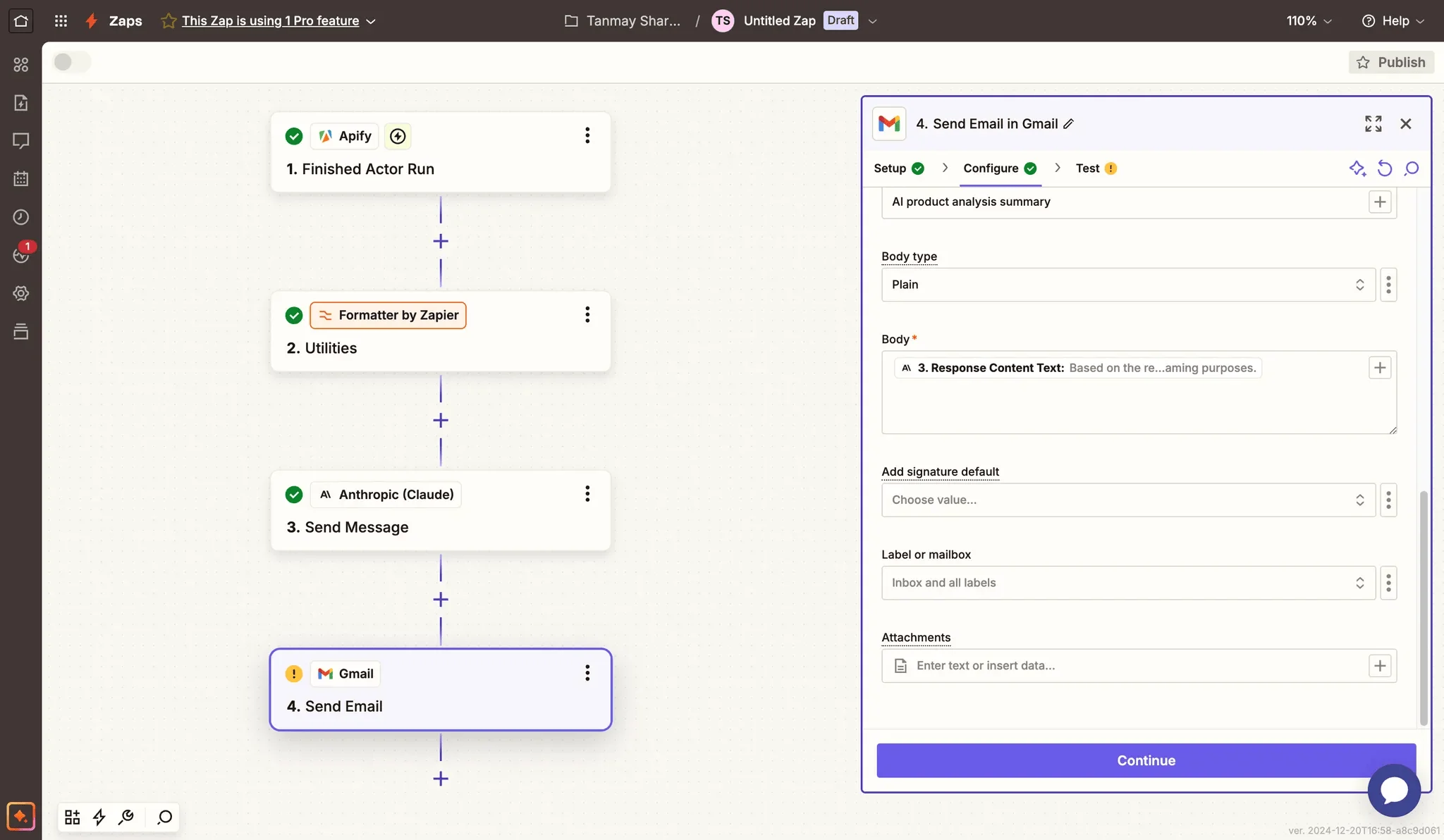

So, agents do stuff. But you might be thinking, "Lots of tools automate tasks now, and plenty use AI within those automations." Fair point. Many setups you see are what we'd call AI-powered workflows.

Think of setting up an automation – maybe one that analyzes product reviews and emails you a daily report.

For a workflow like this, you define a specific sequence of steps: Grab reviews -> Use AI to find sentiment -> Format report -> Send email. It's a fixed path. If a step fails (say, the review site changes its format), the whole workflow usually just stops.

It's smart automation, but it follows your script.

True AI agents aren't locked into a script. They operate using a flexible Think -> Act -> Observe -> Adapt loop. This means they make a plan, try an action, see what happens, and critically, use their reasoning to decide the next best step based on that outcome.

If one approach fails, an agent might try a different tool or strategy entirely.
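The contrast can be sketched in a few lines of Python. All names here are hypothetical, the "sentiment model" is a crude keyword check, and the agent's fallback is an ordered list of tools rather than real reasoning, so treat it as an illustration of the control flow only.

```python
class StepFailed(Exception):
    """Raised when a step cannot complete."""


def scrape_reviews_html(source):
    # Simulates the review site changing its page format.
    raise StepFailed("page layout changed")


def fetch_reviews_api(source):
    # A second, hypothetical way to get the same data.
    return ["Love it", "Too slow", "Great support"]


def score_sentiment(reviews):
    # Crude keyword stand-in for an AI sentiment call.
    positive = ("love", "great")
    hits = sum(any(word in r.lower() for word in positive) for r in reviews)
    return hits / len(reviews)


def run_workflow(source):
    """Fixed path: the first failure stops the whole run."""
    reviews = scrape_reviews_html(source)
    return f"{score_sentiment(reviews):.0%} positive"


def run_adaptive(source):
    """Agent-like path: observe the failure, then try another tool."""
    for fetch in (scrape_reviews_html, fetch_reviews_api):
        try:
            reviews = fetch(source)
        except StepFailed:
            continue  # observed a failure, so switch approach
        return f"{score_sentiment(reviews):.0%} positive"
    return "could not collect reviews"


print(run_adaptive("example-shop"))
```

Calling `run_workflow("example-shop")` raises `StepFailed`, while `run_adaptive` recovers by switching tools. A real agent would pick that alternative through its own reasoning rather than from a list someone wrote in advance.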

The big stumbling block: Context

AI agents sound great on paper. So why aren't they running our digital lives yet? One word: context.

Think about this: how helpful is any assistant if they know nothing about you or the task? How can an agent book the perfect weekend trip without knowing your budget, who you're traveling with, or your dislike for paisley carpets?

An AI needs to understand your world to work effectively; general intelligence isn't enough.

Without specific guidance, the agent acts generically or makes assumptions, resulting in actions that may be technically correct but practically useless or wrong. The Lindy AI team found that giving the right constraints and specific data is often more effective than using a more powerful AI model.

This brings us to the main challenges for agent developers:

- Secure access: How can agents safely tap into personal or company data (emails, files)?

- Relevance filtering: How does the agent identify the most relevant piece of context from potentially vast amounts of information for the task at hand?

- Data freshness & accuracy: How is the context kept up-to-date and verified?
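As a rough illustration of the last two challenges, the Python sketch below drops stale context and ranks what remains by word overlap with the task. The snippets, the one-year cutoff, and the scoring rule are all assumptions; production systems typically use embeddings and proper access controls, and secure access is not addressed here at all.

```python
from datetime import date


def select_context(task, snippets, today, max_age_days=365, top_k=2):
    """Return up to top_k fresh snippets that share the most words with the task."""
    task_words = set(task.lower().split())
    scored = []
    for snippet in snippets:
        age_days = (today - snippet["updated"]).days
        if age_days > max_age_days:
            continue  # freshness: skip context that is too old to trust
        overlap = len(task_words & set(snippet["text"].lower().split()))
        if overlap:  # relevance: keep only snippets that mention the task
            scored.append((overlap, snippet["text"]))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:top_k]]


# Hypothetical personal context an agent might have access to.
snippets = [
    {"text": "weekend trip budget is 800 euros", "updated": date(2025, 3, 1)},
    {"text": "trip companions are partner and dog", "updated": date(2025, 2, 10)},
    {"text": "trip budget was 300 euros", "updated": date(2022, 1, 1)},
    {"text": "favorite pizza topping is mushrooms", "updated": date(2025, 3, 20)},
]

context = select_context("book a weekend trip within budget", snippets,
                         today=date(2025, 4, 11))
print(context)
```

Here the outdated budget and the pizza preference are filtered out, leaving the current budget and the travel companions for the agent to work with.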

Agents in action

So, what does this agent stuff actually look like when people are trying to use it? It's early, but tools are showing us how these AI helpers might handle real tasks. Here are some examples based on existing tools:

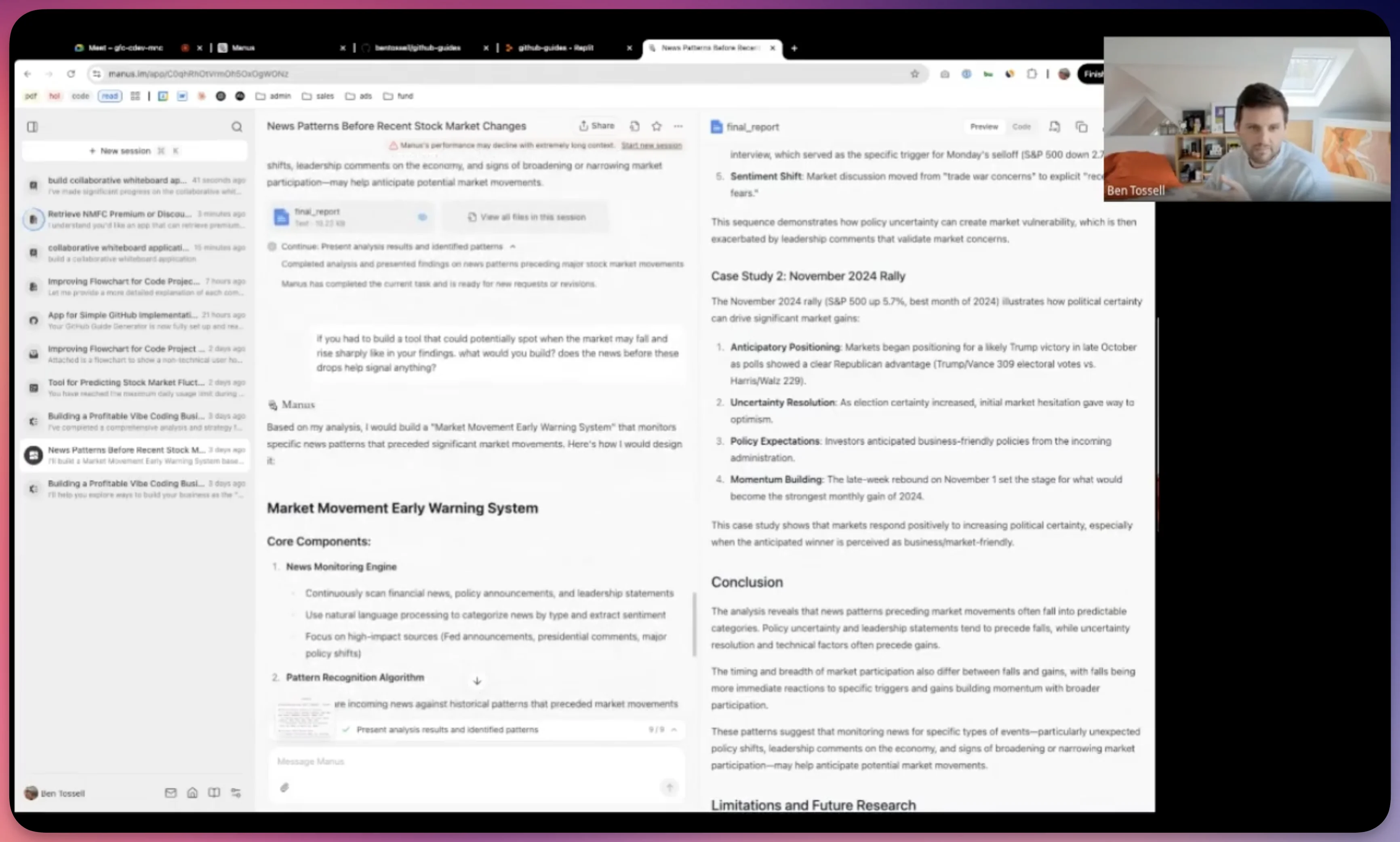

- Handling multi-step research & tasks (Example: Manus AI)

Agents like Manus AI are aiming to be general-purpose doers, capable of tackling complex projects that involve research, analysis, and even some coding, using their own virtual sandbox to operate.

- The task: In a recent workshop, Ben tested Manus by asking it to look back at the stock market over the last 6-8 months and check whether certain kinds of news stories tended to pop up right before big market jumps or drops.

- How it works (agentically): Manus used its built-in browser to look up old stock market data to find those big ups and downs. Then, it searched online news archives for stories that were published just before those market swings happened. It connected the dots (like noticing news about government policy changes often came before a drop) and put all its findings together into a straightforward report.

- Using the Model Context Protocol (MCP)

MCP isn't a standalone tool. It is becoming a standard way for LLMs to connect with external applications, which makes it a major step forward for agentic AI development.

Introduced by Anthropic in late 2024, the Model Context Protocol (MCP) is a standard that outlines how AI models, particularly large language models (LLMs), connect with external data and tools, with the aim of secure and efficient context handling. Let's look at one example use case:

- The task: Providing context-rich customer support to users

- How it works (agentically with MCPs): A support agent can use MCP to securely access Salesforce for purchase history, check Slack for notes on similar issues, and find the right section in a Google Drive manual. This helps the agent provide a precise and informed response by accessing the necessary information quickly.
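For a feel of what travels over the wire, here is a small Python sketch of the requests a client might send. To my understanding of the MCP specification, messages follow JSON-RPC 2.0 and tools are invoked with the `tools/call` method. The tool names and arguments below are hypothetical; real servers for Salesforce, Slack, or Google Drive define their own.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def tool_call_request(tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tools, one per system mentioned in the support example.
requests = [
    tool_call_request("get_purchase_history", {"customer_email": "pat@example.com"}),
    tool_call_request("search_notes", {"query": "refund delayed"}),
    tool_call_request("find_manual_section", {"topic": "refunds"}),
]

wire = [json.dumps(request) for request in requests]
print(wire[0])
```

The point of the shared format is that the agent speaks one protocol to every system instead of needing a custom integration for each.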

- Automating repetitive business processes (Example: Lindy)

Platforms such as Lindy and Gumloop allow users to create specialized AI assistants (Lindy calls them "Lindies") and connect them to thousands of other apps. They're increasingly adding agent-like features, like autonomous loops, to handle repetitive sequences.

- The task: Sales prospecting and outreach at scale

- How it works (agentically): With Lindy's looping abilities, an assistant can handle a big list of prospects. It can research each lead online, check the CRM for details, draft a personalized email, and send it, repeating this for the whole list.
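The shape of that loop, in plain Python, looks roughly like this. It is not Lindy's API: the CRM dictionary, the `research` and `draft_email` helpers, and the sample leads are all hypothetical stand-ins for the web lookups and LLM calls a real assistant would make.

```python
# Hypothetical CRM records keyed by email address.
CRM = {"ada@example.com": {"name": "Ada", "last_contact": "2025-01-15"}}


def research(lead):
    # Stand-in for an online lookup about the lead's company.
    return f"{lead['company']} recently announced {lead['news']}"


def draft_email(crm_record, finding):
    # Stand-in for an LLM-written message; personalizes when the CRM knows the lead.
    greeting = crm_record["name"] if crm_record else "there"
    return f"Hi {greeting}, I saw that {finding}. Could we talk this week?"


def run_outreach(leads, send):
    """Repeat research -> CRM check -> draft -> send for every lead."""
    contacted = []
    for lead in leads:
        finding = research(lead)
        record = CRM.get(lead["email"])  # None when the lead is new
        send(lead["email"], draft_email(record, finding))
        contacted.append(lead["email"])
    return contacted


leads = [
    {"email": "ada@example.com", "company": "Analytical Ltd", "news": "a new funding round"},
    {"email": "sam@example.com", "company": "Beacon Co", "news": "an office in Berlin"},
]

outbox = []  # collect messages instead of really sending them
contacted = run_outreach(leads, send=lambda to, body: outbox.append((to, body)))
print(outbox[0][1])
```

Passing `send` in as a function keeps the sketch safe to run, and it mirrors a sensible habit for real outreach agents: test against a dry-run outbox before anything reaches a prospect.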

These examples show where things are going. AI is no longer just for answering questions; it can now handle complex tasks by planning, acting, and finding the information it needs. The technology is still maturing, but this marks a big shift toward AI as a more active helper in completing tasks.

This post was created by Tanmay.