Design statistically sound A/B tests using AI

Learn how to design, analyze, and interpret A/B tests with Claude, an AI chat assistant.

2024-11-13

A/B testing is a powerful method for making data-driven decisions across various domains, from website design to marketing campaigns and product development. However, designing and interpreting these tests correctly can be challenging. Thankfully, AI can now assist in creating statistically sound A/B tests, helping you make more informed decisions with confidence.

Whether you're optimizing websites, refining marketing strategies, or improving product designs, the principles and workflows in this tutorial apply to any A/B testing scenario. As a running example, we'll use a fictional company, Spriggle, that is A/B testing its landing page for email subscribers.

In this tutorial, you will learn how to:

- Define clear objectives for your A/B test

- Determine appropriate sample sizes and test durations

- Design effective variations for comparison

- Analyze results and interpret statistical significance

- Make data-driven decisions based on test outcomes

Let's dive in.

Step 1: Define your A/B test objectives

Open Claude and start with this prompt to define your test objectives:

I need to conduct an A/B test for [brief description of your project or goal]. Please help me define clear objectives for this test. Consider the following:

1. The overall goal of the test (e.g., increase conversion rate, improve user engagement)

2. The specific element or variable we're testing

3. The metric(s) we'll use to measure success

4. Any constraints or considerations specific to our situation

Based on this information, provide a clear statement of objectives for our A/B test.

Step 2: Determine sample size and test duration

The sample size is the number of users or data points you need in each variation to reliably detect a difference of the size you care about (the minimum detectable effect) at your chosen confidence level and statistical power. The test duration is how long the test must run to reach that sample size.

Let's ask Claude to help determine our sample size and test duration:

Based on our A/B test objective, please help me determine the appropriate sample size and test duration. Consider the following:

1. Our current traffic or user base size: [insert your estimate]

2. The minimum detectable effect we're interested in: [insert percentage and whether it is relative or absolute, e.g., a 5% relative improvement]

3. Desired confidence level: [typically 95% or 99%]

4. Statistical power: [typically 80% or 90%]

Provide recommendations for:

1. Required sample size for each variation

2. Estimated test duration based on our current traffic/user base

3. Any adjustments we should consider based on our specific situation
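
To sanity-check the numbers Claude returns, the calculation can be sketched in a few lines of Python. This is a minimal sketch, not a definitive method: it uses the standard normal-approximation formula for comparing two conversion rates with a two-sided test. It assumes you know your baseline conversion rate (the prompt above does not ask for it, but the formula needs it) and treats the minimum detectable effect as a relative lift. The function names and the example numbers are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift,
                              confidence=0.95, power=0.80):
    """Approximate visitors needed in EACH variation (two-sided test).

    baseline_rate: current conversion rate, e.g. 0.10 for 10% (assumed known)
    relative_lift: minimum detectable effect as a relative change, e.g. 0.05
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    alpha = 1 - confidence
    # Critical values from the standard normal distribution
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    # Sum of the binomial variances of the two rates
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


def estimated_duration_days(n_per_variation, num_variations, daily_visitors):
    """Days needed if all daily visitors are split evenly across variations."""
    return math.ceil(n_per_variation * num_variations / daily_visitors)


# Hypothetical inputs: 10% baseline, 5% relative lift, 2,000 visitors per day
n = sample_size_per_variation(baseline_rate=0.10, relative_lift=0.05)
days = estimated_duration_days(n, num_variations=2, daily_visitors=2000)
print(f"Visitors per variation: {n}, estimated duration: {days} days")
```

Small lifts on low baseline rates require large samples, so if the estimated duration is impractical, consider testing a larger minimum detectable effect or fewer variations.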

Step 3: Design effective variations

Designing effective variations is where the art and science of A/B testing truly come together. This step requires creativity, strategic thinking, and a deep understanding of your audience and business goals. The variations you design will directly impact the insights you can gain from your test, so it's crucial to approach this step thoughtfully.

Let's ask Claude to help us design our test variations:

For our A/B test, we need to design effective variations. Please help me create these variations based on the following information:

1. Our current version (Control - A): [describe the current version]

2. The element we're testing: [e.g., CTA button, headline, layout]

3. Our hypotheses about what might improve performance

4. Any brand guidelines or constraints we need to consider

Please provide:

1. A description of the Control (A) version

2. 2-3 variations (B, C, D) with clear descriptions of how they differ from the control

3. Rationale for each variation, explaining why it might perform better

Step 4: Monitor and analyze results

Monitoring and analyzing your A/B test results is where you begin to unlock the value of your testing efforts. This step involves more than just looking at which variation "won." It's about understanding the nuances of how the variations performed, identifying patterns and trends, and extracting insights that can inform future decisions.

Prompt Claude with:

Our A/B test has been running for [insert duration]. Please help me analyze the results with the following information:

1. Metrics for each variation: [insert key metrics for each variation]

2. Sample size for each variation

3. Our original hypothesis

Please provide:

1. An analysis of statistical significance for each variation compared to the control

2. Interpretation of the results in plain language

3. Any trends or patterns observed in the data

4. Recommendations on whether to conclude the test or continue running it
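
If your metric is a conversion rate, you can cross-check the significance analysis yourself. The sketch below is one common approach, a two-proportion z-test with a confidence interval for the difference. It is not necessarily the method Claude will choose, and it assumes the metric is a simple converted/did-not-convert outcome. The function name and the counts in the example are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Compare variation B against control A on conversion rate.

    conv_a, conv_b: number of conversions in each group
    n_a, n_b: number of visitors in each group
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    difference = p_b - p_a

    # Pooled standard error, used for the hypothesis test (H0: rates are equal)
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = difference / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided

    # Unpooled standard error, used for the confidence interval
    se_unpooled = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    ci = (difference - z_crit * se_unpooled, difference + z_crit * se_unpooled)

    return {"difference": difference, "z": z, "p_value": p_value, "ci": ci}


# Hypothetical counts: control A vs. variation B
result = two_proportion_z_test(conv_a=480, n_a=5000, conv_b=540, n_b=5000)
print(result)
```

When several variations are each compared with the same control, the chance of at least one false positive grows with the number of comparisons. A simple, conservative adjustment is to divide the significance threshold by the number of comparisons (the Bonferroni correction).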

Step 5: Draw conclusions and plan next steps

The final step in your A/B testing process is perhaps the most crucial: drawing meaningful conclusions and planning your next steps. This is where you translate your test results into actionable insights and future strategies. It's not just about implementing the "winning" variation, but about learning from the entire process and using those learnings to inform your ongoing optimization efforts.

When drawing conclusions from your A/B test, consider the following:

- Holistic Evaluation: Look at the full picture, not just the primary metric. How did the variations affect other important metrics? Were there any unexpected side effects?

- Context Consideration: Interpret your results within the broader context of your business goals, user behavior trends, and external factors that might have influenced the test.

- Hypothesis Validation: Reflect on your original hypotheses. Were they confirmed or disproven? What does this tell you about your understanding of your users?

- Segmentation Insights: Analyze how different user segments responded to the variations. This can uncover opportunities for personalization or reveal new user personas.

- Long-term Implications: Consider the long-term impact of implementing changes based on your test results. Will the improvements be sustainable? Are there any potential negative consequences?
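
For the segmentation point above, a breakdown of conversion rate by segment and variation is often enough to spot where responses diverge. This is a minimal sketch that assumes you can export one record per visitor as a (segment, variation, converted) tuple. The record layout, the segment names, and the function name are hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(records):
    """Return {(segment, variation): conversion_rate}.

    records: iterable of (segment, variation, converted) tuples,
             where converted is True or False.
    """
    # (segment, variation) -> [conversions, visitors]
    totals = defaultdict(lambda: [0, 0])
    for segment, variation, converted in records:
        counts = totals[(segment, variation)]
        counts[0] += int(converted)
        counts[1] += 1
    return {key: conv / visitors for key, (conv, visitors) in totals.items()}


# Hypothetical export: a handful of visitors from two segments
records = [
    ("mobile", "A", True),
    ("mobile", "A", False),
    ("mobile", "B", True),
    ("desktop", "A", False),
    ("desktop", "B", True),
    ("desktop", "B", False),
]
for (segment, variation), rate in sorted(conversion_by_segment(records).items()):
    print(f"{segment:8s} {variation}: {rate:.0%}")
```

Each segment contains only part of your sample, so segment-level differences are less reliable than the overall result. Treat them as leads for follow-up tests rather than as conclusions.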

Let's ask Claude to help us conclude our test and plan next steps:

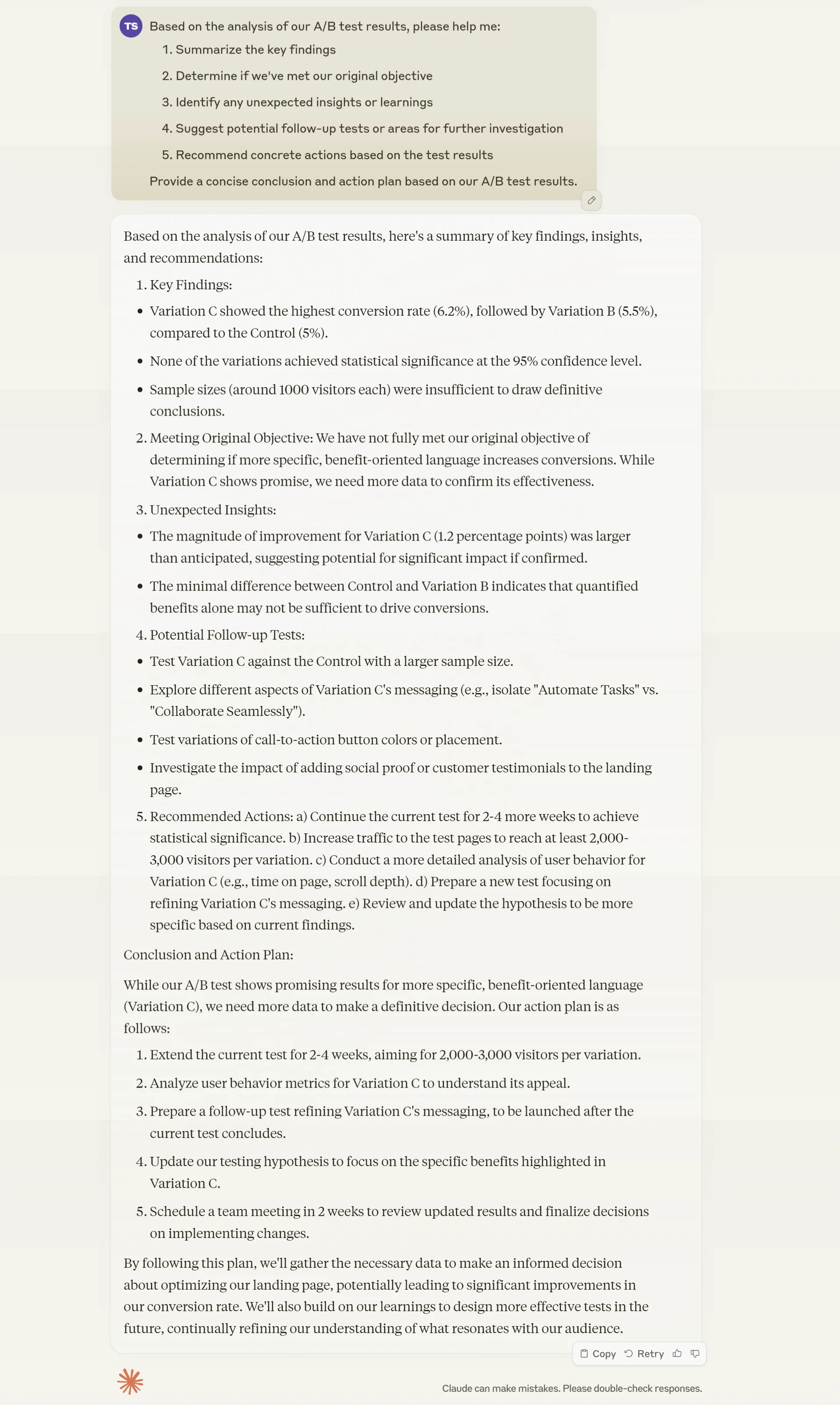

Based on the analysis of our A/B test results, please help me:

1. Summarize the key findings

2. Determine if we've met our original objective

3. Identify any unexpected insights or learnings

4. Suggest potential follow-up tests or areas for further investigation

5. Recommend concrete actions based on the test results

Provide a concise conclusion and action plan based on our A/B test results.

Review the AI's suggestions and adjust based on your business knowledge and constraints. Remember, A/B testing is an iterative process, and each test should inform your future strategies and tests.

A/B testing is not a one-time event but an ongoing process of optimization. Use the insights gained from this test to inform your future experiments and continue refining your approach. With practice and persistence, you'll be able to make increasingly data-driven decisions that drive real improvements in your key metrics.

This tutorial was created by Tanmay.