Prompt mechanics for high-quality output

Learn popular prompting techniques: zero-shot, few-shot, chain-of-thought, context-aware, and RAG.

2024-11-13

This will be a slightly different tutorial than most of the ones on our platform. Instead of walking through a specific AI tool and workflow, we’ll be reviewing the mechanics and theory behind various prompting techniques.

In this tutorial, we’ll discuss these prompting techniques:

- Zero-shot

- Few-shot

- Chain-of-thought

- Context-aware

- Retrieval-augmented generation (RAG)

Chances are, if you’ve worked with LLMs or used a prompt-based tool, you’ve used many, if not all, of these prompting techniques. We’ll be using ChatGPT in our example prompts if you want to follow along.

The list of techniques in this tutorial is non-exhaustive; instead, we tried to gather the most popular techniques when working with an AI chatbot at the interface level (not at the development level). The goal of this tutorial is to guide you on when it might be helpful to use a certain prompting technique to improve your prompt-based workflows.

Let’s dive in.

1. Zero-shot prompting

Zero-shot prompting is the most basic type of prompt. It involves requesting something from an LLM without providing any examples or context.

Essentially, with a zero-shot prompt, you’re relying entirely on what the LLM learned from its training data. Earlier models (e.g., GPT-2) gave much worse results with this method than today’s leading models (e.g., GPT-4o). So while zero-shot prompting is the simplest method, in our view it’s often all you need for general-purpose LLM tasks.

There is typically one part to a zero-shot prompt:

- Define the task / ask the model: Clearly describe the task or question in the prompt and ask the model to generate a response based on its training.
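
As a minimal sketch of that single part, the snippet below assembles a zero-shot prompt as a plain string. The helper name `build_zero_shot_prompt` is our own illustration, not part of any library, and the result is meant to be pasted into a chatbot such as ChatGPT.

```python
def build_zero_shot_prompt(task: str, text: str) -> str:
    """Combine a task description and the input text, with no examples."""
    return f'{task}\n\n"{text}"'


prompt = build_zero_shot_prompt(
    "Translate the following sentence from English to Spanish:",
    "How are you today?",
)
print(prompt)
```

Nothing here guides the model beyond the task itself, which is what makes the prompt zero-shot.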

Zero-shot prompting is best for certain applications:

- Language translation

- Text summarization

- Basic question answering

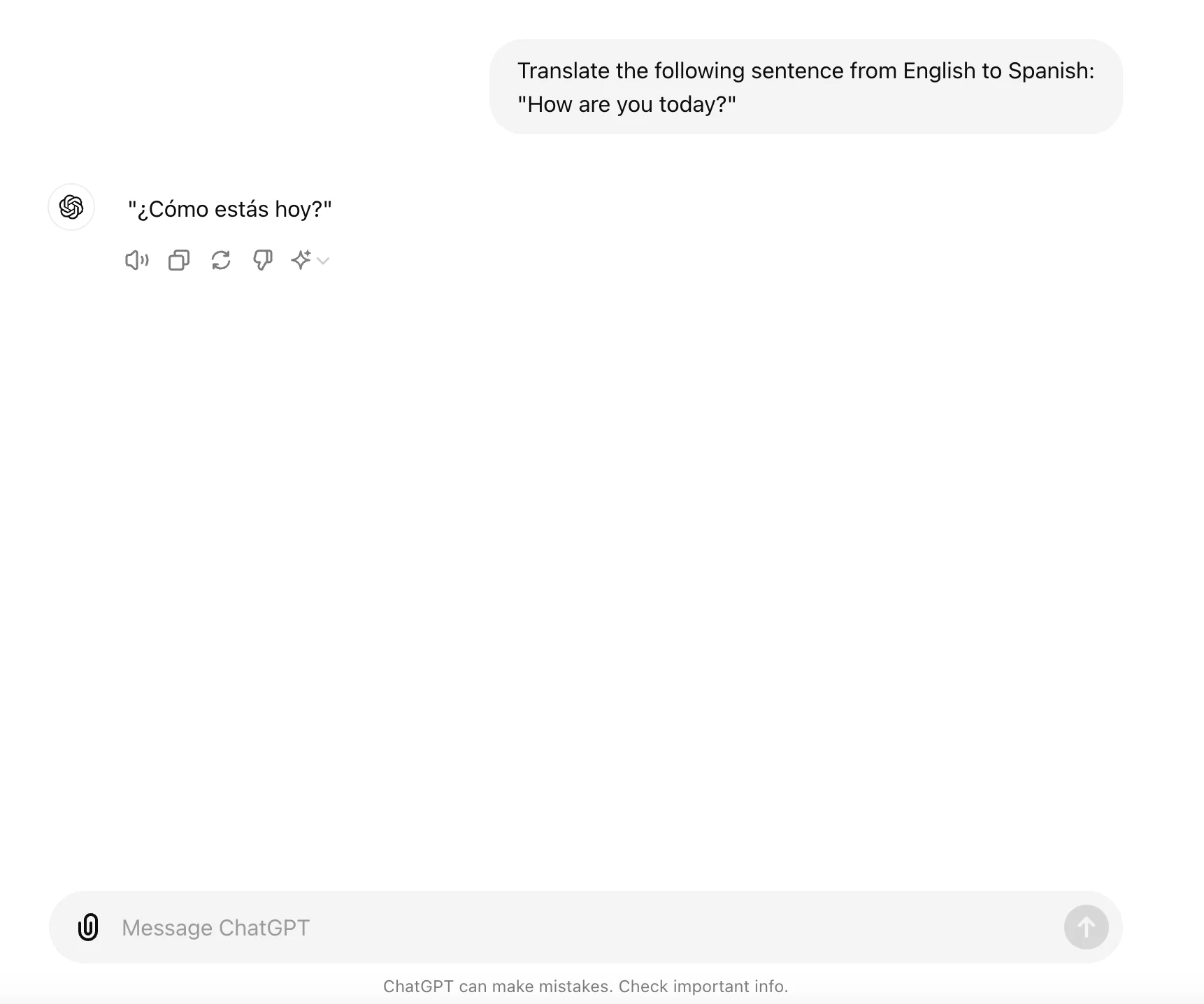

Example zero-shot prompt:

Translate the following sentence from English to Spanish:

"How are you today?"

2. Few-shot prompting

Few-shot prompting involves giving the model only a few examples (or shots) of the desired input-output behavior within the prompt.

As the name suggests, this goes a step beyond zero-shot prompting. We’re providing not only a task and a query to the model, but also a short list of examples that show the pattern we want it to follow.

There are typically three parts to a few-shot prompt:

- Set up the task: You start by clearly defining the task you want the model to perform. For instance, if you want the model to label specific data, you set up the context for that task.

- Provide examples: Within the prompt, you include a small number of examples that show how the input should be mapped to the output. This could be one-shot (one example) or few-shot (a few examples).

- Ask the model: After providing the examples, you present one or more new instances for the model to process. The model uses the pattern demonstrated by the examples to generate the appropriate output.
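
The three parts above can be sketched as a small prompt builder. This is an illustration under our own assumptions: the function `build_few_shot_prompt` and its parameter names are hypothetical, and the output is just text to paste into a chatbot.

```python
def build_few_shot_prompt(task, examples, ask, query, input_label, output_label):
    """Assemble a few-shot prompt: task, worked examples, then the new instance."""
    lines = [task, ""]  # part 1: set up the task
    for number, (source, target) in enumerate(examples, start=1):
        # part 2: each example shows one input mapped to its output
        lines.append(f"Example {number}:")
        lines.append(f"{input_label}: {source}")
        lines.append(f"{output_label}: {target}")
        lines.append("")
    # part 3: present the new instance and leave the output blank
    lines.append(ask)
    lines.append(f"{input_label}: {query}")
    lines.append(f"{output_label}:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    task="Convert the following numbers from Coolios to FlopFlops:",
    examples=[(0, 6), (12, 18)],
    ask="Now, convert this number:",
    query=24,
    input_label="Coolio",
    output_label="FlopFlop",
)
print(prompt)
```

Leaving the final output label empty invites the model to complete the pattern that the examples demonstrate.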

Few-shot prompting is best for certain applications:

- Sentiment analysis

- Data extraction

- Data labelling

Example few-shot prompt:

Convert the following numbers from Coolios to FlopFlops:

Example 1:

Coolio: 0

FlopFlop: 6

Example 2:

Coolio: 12

FlopFlop: 18

Now, convert this number:

Coolio: 24

FlopFlop:


3. Chain-of-thought prompting

Chain-of-thought prompting is a technique where the model is guided to generate intermediate reasoning steps before arriving at the final answer. This method helps the model to break down complex problems into simpler sub-problems, allowing for more accurate and explainable responses.

There are typically three parts to a chain-of-thought prompt:

- Define the task: Clearly describe the task or question in the prompt.

- Guide the model: Encourage the model to explicitly reason through the problem by generating a sequence of logical steps.

- Generate the final answer: After reasoning through the steps, the model arrives at the final answer.
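
One way to sketch these parts is a helper that asks for numbered reasoning steps plus a clearly marked final line, and a second helper that pulls that line out of a reply. Both function names and the "Final answer:" marker are our own assumptions, and the `response` string below is a hand-written stand-in, not real model output.

```python
import re


def build_cot_prompt(question: str) -> str:
    """Ask for explicit reasoning steps before a clearly marked final answer."""
    return (
        f"{question}\n\n"
        "Work through the problem step by step, numbering each step.\n"
        "Then give the result on a last line that starts with 'Final answer:'."
    )


def extract_final_answer(response: str):
    """Return the text after 'Final answer:', or None if the marker is missing."""
    match = re.search(r"^Final answer:\s*(.+)$", response, flags=re.MULTILINE)
    return match.group(1).strip() if match else None


prompt = build_cot_prompt(
    "A shop sells pens in packs of 12. How many pens are in 3 packs plus 4 loose pens?"
)

# Hand-written stand-in for a model reply, used only to show the parsing step.
response = "1. 3 packs x 12 pens = 36 pens.\n2. 36 + 4 = 40 pens.\nFinal answer: 40"
print(extract_final_answer(response))
```

Asking for a marked final line keeps the reasoning visible while making the answer easy to find, whether you read it yourself or parse it.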

Chain-of-thought is best for certain applications:

- Logical reasoning tasks

- Complex decision-making processes

- Complex content generation

- Reading comprehension and answering complex questions

Example chain-of-thought prompt:

Generate a business proposal by following the provided steps.

Step 1: Understand the client's needs. Research the client's business and gather information about their needs, challenges, and goals.

Step 2: Define the solution. Clearly outline the products or services you are offering and explain how they will address the client's needs.

Step 3: Develop a structure. Create a clear structure for your proposal, including an introduction, background information, proposed solution, timeline, pricing, and terms and conditions.

Step 4: Write the introduction. Introduce your company and provide an overview of the proposal's purpose.

Step 5: Present the background information. Provide context about the client's business and the problem you are addressing.

Step 6: Detail the proposed solution. Explain your proposed solution in detail, including the benefits and how it will be implemented.

Step 7: Create a timeline. Outline the key milestones and deliverables, along with the projected timeline for completion.

Step 8: Provide pricing information. Include a detailed breakdown of costs, any payment terms, and any additional fees.

Step 9: Outline the terms and conditions. Specify the terms and conditions, including any legal or contractual obligations.

Step 10: Review and edit. Carefully review the proposal for accuracy, clarity, and professionalism. Edit as necessary.

Step 11: Submit the proposal. Provide the completed proposal and be available for any follow-up questions.


4. Context-aware prompting

Context-aware prompting is a technique where the model is provided with detailed context or background information within the prompt to help it generate more accurate and relevant responses. This approach leverages the model's ability to understand and utilize context to improve the quality of its outputs.

There are typically three parts to a context-aware prompt:

- Define the task: Clearly describe the task or question you want the model to perform or answer.

- Provide detailed context: Include relevant background information or context that can help the model understand the task better.

- Ask the model: After providing the context, ask the model to perform the task or answer the question based on the given information.
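
A minimal sketch of this structure keeps the background in a labeled block and places the request after it. The helper name `build_context_prompt` and the shortened context text are illustrative assumptions on our part.

```python
def build_context_prompt(context: str, task: str) -> str:
    """Place the background information first, then the request that relies on it."""
    return f"Context:\n\n{context.strip()}\n\n{task.strip()}"


prompt = build_context_prompt(
    context=(
        "A small medieval village lives in fear of a dragon in a nearby cave. "
        "A young hero decides to confront it."
    ),
    task="Generate the beginning of the story.",
)
print(prompt)
```

Keeping the context and the request as separate arguments makes it easy to reuse the same background for several different tasks.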

Context-aware prompts are best for certain applications:

- Content generation

- Context-specific problem-solving

- Scenario planning

Example context-aware prompt:

Context:

In a small medieval village nestled at the foot of a mountain, the villagers live in fear of a dragon that resides in a nearby cave. Every year, they must offer a tribute to the dragon to prevent it from attacking the village. The story follows a young hero who decides to confront the dragon and end its reign of terror.

Generate the beginning of the story.


5. Retrieval-augmented generation (RAG) prompting

Retrieval-augmented generation (RAG) prompting is a technique that combines retrieval-based models with generative models to improve performance and accuracy.

The RAG approach involves retrieving relevant information from a knowledge base or external documents and using this information to generate more informed and accurate responses.

Oftentimes, when working with an AI chatbot, this will look like providing it with external documents or pointing it to an external database of information.

There are typically four parts to a RAG prompt:

- Input query: You provide a query or prompt, along with a link to an external knowledge source or an uploaded document.

- Retrieval step: The system searches the knowledge base, database, or document corpus for the pieces of information most relevant to the query.

- Augmentation: The retrieved information is combined with the original query to create an enhanced prompt.

- Generation step: The enhanced prompt is fed into a generative model, which produces a response using both the query and the augmented information.
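
The first three steps above can be sketched with a toy retriever. This is a simplification under stated assumptions: the word-overlap scoring, the function names, and the sample guideline sentences are all invented for illustration, and production systems typically rely on more capable search than this. The generation step is left out because it simply means sending the final prompt to a model.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Retrieval step: rank documents by how many words they share with the query."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def augment(query, passages):
    """Augmentation: combine the retrieved passages with the original query."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the sources below.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base: three made-up editorial guidelines.
guidelines = [
    "Headlines use sentence case and stay under 70 characters.",
    "Spell out numbers below ten in body text.",
    "Every article ends with a one-sentence summary.",
]
query = "How should I write headlines and numbers?"  # input query
passages = retrieve(query, guidelines)
prompt = augment(query, passages)
print(prompt)
```

When you attach a document in a chatbot, the tool handles retrieval and augmentation for you; the sketch only makes those hidden steps visible.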

RAG is best for certain applications:

- Domain-specific question-answering

- Document summarization

- Content-specific generation

Example RAG prompt:

Provide feedback on my article below, based on the attached editorial guidelines.

[attach editorial guidelines], [attach article]


This tutorial was created by Garrett.